MOTIVATIONS

Physical exercise benefits people’s health in many ways, regardless of their age, gender, region or physical characteristics. We believe that simply monitoring and reporting people’s physical activity, as most products do, is not enough to encourage regular exercise. A proactive approach that directly rewards people’s exercise is needed. A growing number of health and life insurance companies want to engage their policyholders and reward them with hard cash for participating in more physical activity. An effective incentive program not only engages participants but can also significantly lower healthcare spending. Our Physical Activity Reward System (PARS) provides a platform where users with a certified wearable device can join a reward program and trade their Proof of Physical Activities (PoPA) for healthcare benefits such as cost reductions and advantageous policy clauses. The platform uses Blockchain technology to provide immutable, trustworthy physical activity data transparently to both participants and sponsors. Participants receive their rewards through a decentralized algorithm that guarantees fairness and transparency, and sponsors, such as health insurance companies, can reward their customers directly without costly intermediary brokers.

Physical Activity Assessment for All Exercises

People’s physical activity is assessed through continuous, 24x7 measurement of biometric, motion and emotion sensor data. The assessment uses AI and machine learning to provide: a) personalization of the raw measurement data, b) Proof of Physical Activities (PoPA), and c) fraud detection for bogus exercises.

Each PoPA has a face value that represents the user’s effort, computed with a fair, published formula known to all users. By using PoPA, PARS protects user privacy: it does not reveal any details of a user’s exercise, such as its time, location or type. Only the effort count is stored on the Blockchain, where sponsors can access it.
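The privacy property described above (only an effort count leaves the user's side) can be illustrated with a minimal sketch. The published PARS formula is not reproduced here: the per-activity `INTENSITY_WEIGHTS`, the weighted-minutes scoring, and the names `Activity`, `popa_face_value` and `chain_record` are all hypothetical assumptions chosen only to show the shape of the idea.

```python
import hashlib
from dataclasses import dataclass

# HYPOTHETICAL intensity weights per activity type. The actual PARS formula
# is published separately and is not reproduced here.
INTENSITY_WEIGHTS = {"walk": 1.0, "run": 2.5, "swim": 3.0}

@dataclass
class Activity:
    kind: str        # type of exercise: stays private, never published
    start_time: str  # timestamp: stays private, never published
    location: str    # location: stays private, never published
    minutes: float   # duration of the activity

def popa_face_value(activities: list[Activity]) -> int:
    """Hypothetical effort score: intensity-weighted minutes, rounded down.
    Unknown activity types contribute nothing."""
    total = sum(INTENSITY_WEIGHTS.get(a.kind, 0.0) * a.minutes for a in activities)
    return int(total)

def chain_record(user_id: str, activities: list[Activity]) -> dict:
    """Build the record that would be published on-chain: a hashed user ID
    and the effort count only. Time, location and type are dropped."""
    return {
        "user": hashlib.sha256(user_id.encode()).hexdigest(),
        "effort": popa_face_value(activities),
    }
```

For example, a 30-minute walk and a 20-minute run score 30 + 50 = 80 under these made-up weights, and `chain_record("bob", ...)` yields a dictionary with just the keys `user` and `effort`. Note that a plain hash of a known user ID is pseudonymous rather than anonymous, so a production design would likely need salting or a separate identity scheme.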

PRODUCTS

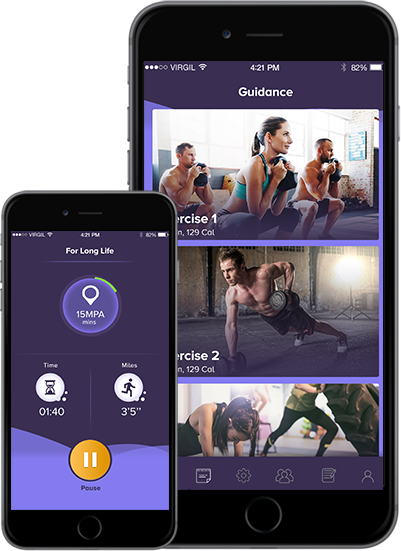

Our product is more than a wristband that tells you how many steps you have walked. It provides a unified scale of measurement for various types of physical activity and determines their overall positive contribution to your health.

FLL RWRD PRO

Professional FLL feature device

FLL RWRD EZ

Full FLL feature device

Fitbit

FLL compatible device

Apple Watch

FLL compatible device

HOW IT WORKS

FLL customers get rewards for their physical activities.

User registration

Bob registers his new wearable device with the FLL system server.

Exercise

Bob wears his fitFLL device, which performs “on-device fraud detection” and forms physical activity data blocks.

Synchronize

Bob's device synchronizes the physical activity data to his mobile phone. The app on his phone then performs “in-app AI personalization and fraud detection”, forms reward claims and submits them to the distributed servers.

Decentralization

The decentralized apps process the reward claims, perform “distributed consensus on fraud detection”, and create transactions that pay system rewards to qualified claims.

Vendor registration

Insurance Co. registers on the FLL website.

Policy management

Insurance Co. verifies Bob's policy and submits a vendor reward payment request for Bob's physical activity to the Blockchain.

Transactions

The distributed servers create a transaction that pays a vendor reward for Bob's physical activity claims. The system retains a transaction fee on vendor rewards.

Benefits

Bob gets his rewards, and the vendor gets the benefits.
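The claim flow above (fraud checks, consensus, system rewards, and vendor rewards with a retained fee) can be sketched as a small pipeline. This is a minimal illustration under stated assumptions, not the FLL implementation: the strict-majority acceptance rule, the `units_per_effort` rate, the default 200-basis-point (2%) fee, and every name below are hypothetical. A real decentralized network would run each check on a separate node and rely on a fault-tolerant consensus protocol; the simple vote here only shows the acceptance rule.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

@dataclass(frozen=True)
class Claim:
    user: str    # pseudonymous user ID
    effort: int  # PoPA face value being claimed

@dataclass(frozen=True)
class Transaction:
    to: str      # recipient of the reward
    amount: int  # reward in whole units
    source: str  # "system" or the name of the paying vendor

# HYPOTHETICAL: a fraud check returns True when it finds nothing suspicious.
FraudCheck = Callable[[Claim], bool]

def reaches_consensus(claim: Claim, validators: List[FraudCheck]) -> bool:
    """Accept a claim only if a strict majority of validators approve it."""
    approvals = sum(1 for check in validators if check(claim))
    return approvals * 2 > len(validators)

def system_rewards(claims: List[Claim], validators: List[FraudCheck],
                   units_per_effort: int = 1) -> List[Transaction]:
    """Create a system reward transaction for every claim that passes consensus."""
    return [Transaction(c.user, c.effort * units_per_effort, "system")
            for c in claims if reaches_consensus(c, validators)]

def vendor_reward(claim: Claim, vendor: str, amount: int,
                  fee_bps: int = 200) -> Tuple[Transaction, int]:
    """Pay a vendor reward to the claimant; the system keeps fee_bps basis
    points of the amount as its transaction fee (integer arithmetic)."""
    fee = amount * fee_bps // 10_000
    return Transaction(claim.user, amount - fee, vendor), fee
```

With three hypothetical validators, two of which reject any claim above 1000 effort units, a claim of 80 is accepted and a claim of 5000 is rejected. A vendor reward of 1000 units at the default fee pays 980 to the claimant and leaves 20 with the system. Integer units are used so that fee rounding is explicit and no floating-point drift enters the payouts.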

OUR TEAM

Wonderful People with Wonderful Experience, Working on a Wonderful Idea.

Sam Peng

Max Mansoubi

Jon Li

Amy Wang

Alan Xu

Meriem Benmmlouk

OUR CORPORATE WELLNESS PARTNERS

We partner with leading health and corporate wellness providers, foundations, health and fitness clubs, and third-party health and fitness product and service providers to encourage people to exercise by rewarding their physical activity impartially.

Get in Touch

Any questions? Reach out to us and we'll get back to you shortly.

DOWNLOAD THE APP